Data labeling is the process of tagging raw data such as images, text, video, or audio so machines can understand and learn from it. It underpins most supervised machine learning, because models depend on accurate labels to make correct predictions. Today, data labeling has evolved from purely manual annotation to AI-assisted workflows, making the process faster, more consistent, and more scalable. This post explains what data labeling is, why it matters, the latest tools, and best practices to help businesses and data teams get better results.

What is Data Labeling?

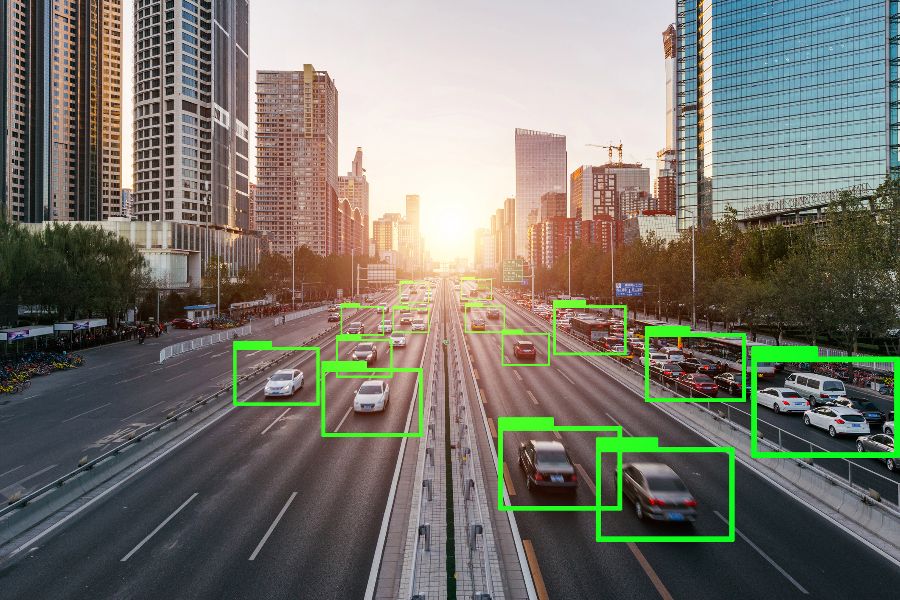

Data labeling (or annotation) is the process of tagging raw data (images, text, audio, video) with labels or metadata so machines can learn the patterns in it. For example, labeling pictures of traffic lights with tags like “red”, “green”, or “yellow” helps train computer-vision systems to recognize the state of a light.
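
To make the traffic-light example concrete, here is a minimal sketch of what a labeled record could look like in Python. The class name `LabeledImage`, the `ALLOWED_LABELS` set, and the file names are assumptions made up for illustration; real annotation tools use their own export formats.

```python
from dataclasses import dataclass

# Hypothetical label set, taken from the traffic-light example above.
ALLOWED_LABELS = {"red", "green", "yellow"}


@dataclass(frozen=True)
class LabeledImage:
    path: str   # location of the raw image (illustrative file name)
    label: str  # tag assigned by an annotator

    def __post_init__(self):
        # Reject any tag that is not part of the agreed label set.
        if self.label not in ALLOWED_LABELS:
            raise ValueError(f"unknown label: {self.label!r}")


# A tiny labeled dataset: raw inputs paired with their tags.
dataset = [
    LabeledImage("light_001.jpg", "red"),
    LabeledImage("light_002.jpg", "green"),
]
```

Checking each tag against a fixed label set at creation time is one simple way to catch typos before they reach training data.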

Why Data Labeling Matters More Now

- It provides accurate “ground truth” that machine learning models need. Without correct labels, models learn wrong patterns.

- It makes data more usable by turning raw inputs into a structured form that analysts and models can work with.

- It supports better predictions. With quality labeling, AI/ML models tend to perform more reliably.

How AI is Streamlining Data Labeling

AI and automation now work alongside human annotators to make data labeling faster, less error-prone, and more scalable. Common approaches include:

- Automatic / AI-assisted labeling tools can propose labels for simple or repetitive data (images, text), so humans only need to correct where needed. This boosts speed and consistency.

- Semi-automated workflows combine machine suggestions with human oversight, for example in error detection, quality checks, or refining label boundaries.

- Tools with version control, collaboration, and data management help teams work together, track changes, and maintain annotation quality.
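
The AI-assisted pattern described above can be sketched as a simple routing step: a model proposes a label with a confidence score, confident proposals are accepted, and the rest go to a human review queue. This is a sketch under stated assumptions, not how any particular tool works. The function names, the 0.9 threshold, and the keyword-based `toy_predict` stand-in are all hypothetical.

```python
def route_predictions(items, predict, threshold=0.9):
    """Split items into auto-accepted and needs-review lists.

    `predict` is any callable returning (label, confidence) for an item.
    The threshold is an illustrative value, not a recommendation.
    """
    auto_accepted, needs_review = [], []
    for item in items:
        label, confidence = predict(item)
        target = auto_accepted if confidence >= threshold else needs_review
        target.append((item, label, confidence))
    return auto_accepted, needs_review


def toy_predict(text):
    # Stand-in for a real model: keyword rules with made-up confidences.
    if "great" in text:
        return "positive", 0.95
    if "terrible" in text:
        return "negative", 0.92
    return "neutral", 0.50


accepted, review_queue = route_predictions(
    ["great phone", "terrible battery", "it arrived on Tuesday"],
    toy_predict,
)
```

With these inputs, the first two items are accepted automatically and the third lands in the review queue, so annotators spend their time only on the uncertain case.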

Key Tools and Platforms to Watch in 2025

Here are some of the widely used tools:

| Tool / Platform | Strengths and Use Cases |
|---|---|
| SuperAnnotate | Works for multimodal data (images, video, text). Strong for teams needing collaboration, review, and evaluation. |
| Label Studio | Flexible tool with support for different data types (text, image, audio). Open-source friendly. |
| Dataloop | Good for large-scale annotation, dataset versioning, quality control. Great when datasets are big and evolving. |

Best Practices for Effective Data Labeling

To get value from data labeling, the following practices help avoid common mistakes:

- Define clear labeling guidelines before starting: specify what each label means so that annotators are not left to guess.

- Use human review even when using automated tools. Humans help catch edge cases.

- Ensure consistency — labelers should follow the same criteria. Use review cycles.

- Measure and monitor quality: track error rates, the amount of re-labeling needed, and inter-annotator agreement.

- Build scalable workflows — allow for dataset growth, changing classes, and updates.
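
Inter-annotator agreement, mentioned in the list above, is often summarized with Cohen's kappa, which compares how often two annotators agree against how often they would agree by chance. The following is a minimal from-scratch sketch for two annotators. The function name and the sample labels are assumptions for illustration, and in practice a library implementation may be preferable.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)

    # Observed agreement: fraction of items given the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Expected chance agreement, from each annotator's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Made-up labels from two annotators for four traffic-light images.
annotator_1 = ["red", "red", "green", "yellow"]
annotator_2 = ["red", "green", "green", "yellow"]
kappa = cohens_kappa(annotator_1, annotator_2)  # 7/11, roughly 0.64
```

A value of 1 means perfect agreement and a value near 0 means agreement no better than chance. A low score is usually a sign that the labeling guidelines need tightening.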

Challenges to Watch

While AI helps, there are still hurdles:

- Labeling errors: misclassifications, subjective labels, poor quality control.

- High cost for large datasets if many rare labels are needed.

- Tooling complexity: managing versions, different formats, and integrations with ML pipelines.

- Privacy and data security concerns, especially with sensitive data (medical, financial, etc.).

Conclusion

Data labeling is no longer just a preliminary step in AI; it has become core to model performance. AI-assisted tools are making labeling faster and more consistent, but success still depends on following best practices: clear guidelines, human review, and scalable workflows.

If you are building or managing ML work, investing properly in data labeling tools and quality can improve predictions, reduce rework costs, and speed up deployment.